Version 34/74

Luning, 12/02/2014 12:40 AM

The International Cognitive Ability Resource (ICAR)

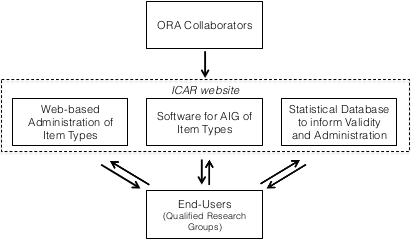

ICAR, a public-domain and open-source tool, aims to provide a large and dynamic bank of cognitive ability measures for use in a wide variety of applications. By encouraging the use, revision and ongoing development of these measures among qualified research groups around the world, ICAR will further our understanding of the holistic structure of cognitive abilities, as well as of the nature of the associations between cognitive ability constructs and other variables. ICAR will build heavily on automatic item generation techniques, which yield items with predictable psychometric qualities. The item generators developed for ICAR will be distributed as functions in psychometrically informed, easily implemented, open-source software. In addition, suitable statistical methodology will be developed, and subprojects will use ICAR items to explore specific research questions. This international collaboration will create a platform for more standardized assessment and faster scientific progress across the disciplines and research groups around the world that use cognitive ability measures to diagnose impairments, evaluate the correlates of various abilities and predict important life outcomes.
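To make the idea of automatic item generation more concrete, here is a minimal Python sketch of a template-based generator for a number-series item, in the spirit of the Letter and Number Series type. It is a hypothetical illustration, not one of the actual ICAR generators: the rule names (`"add"`, `"multiply"`, `"alternate"`), the function `generate_series_item`, the parameter ranges and the near-miss distractor strategy are all assumptions. The premise it illustrates is that the rule chosen by the template (its structural feature) is what mainly drives difficulty, so items generated from the same rule are expected to behave similarly; whether they actually do is an empirical question answered by calibration.

```python
from __future__ import annotations

import random
from dataclasses import dataclass


@dataclass
class SeriesItem:
    stem: list[int]      # terms shown to the respondent
    answer: int          # correct next term
    options: list[int]   # correct answer plus distractors, shuffled
    rule: str            # structural feature (template rule) used to build the item


def generate_series_item(rule: str, length: int = 5, n_options: int = 4,
                         rng: random.Random | None = None) -> SeriesItem:
    """Hypothetical template-based generator for one number-series item."""
    rng = rng or random.Random()
    start = rng.randint(1, 9)
    if rule == "add":            # constant difference, e.g. 3, 7, 11, 15, ...
        step = rng.randint(2, 9)
        terms = [start + i * step for i in range(length + 1)]
    elif rule == "multiply":     # constant ratio, e.g. 2, 6, 18, 54, ...
        factor = rng.randint(2, 3)
        terms = [start * factor ** i for i in range(length + 1)]
    elif rule == "alternate":    # two alternating differences, e.g. +3, +7, +3, ...
        a, b = rng.randint(2, 5), rng.randint(6, 9)
        terms = [start]
        for i in range(length):
            terms.append(terms[-1] + (a if i % 2 == 0 else b))
    else:
        raise ValueError(f"unknown rule: {rule}")

    stem, answer = terms[:-1], terms[-1]
    # Distractors are distinct "near misses" within +/-3 of the correct answer.
    distractors: set[int] = set()
    while len(distractors) < n_options - 1:
        distractors.add(answer + rng.choice([-3, -2, -1, 1, 2, 3]))
    options = [answer, *distractors]
    rng.shuffle(options)
    return SeriesItem(stem, answer, options, rule)


if __name__ == "__main__":
    item = generate_series_item("alternate", rng=random.Random(42))
    print(item.stem, "?", item.options)
```

Passing a seeded `random.Random` makes a generated form reproducible, which is convenient when the same items have to be re-administered or shared between research groups.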

ICAR Item Types (registration through the online form is required for access)

At the moment, the ICAR set includes nine item types:

- Three-Dimensional Rotation

- Letter and Number Series

- Matrix Reasoning

- Verbal Reasoning

- Figural Analogies

- Object Recognition

- Facial Identity Recognition

- Facial Expression Recognition

- Corpora-Based Questions

Testing Platform

Concerto is an open-source online testing platform developed by the Psychometrics Centre, University of Cambridge. The platform is based on the R statistical language and allows users to create various online assessments, ranging from simple surveys to complex IRT-based adaptive tests. Composed of open-source components (HTML, SQL, and R), the system is regularly updated and improved by its community of users. For more information about Concerto, please visit http://www.psychometrics.cam.ac.uk/newconcerto.
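The core loop of an IRT-based adaptive test can be sketched in a few lines. The Python sketch below assumes a two-parameter logistic (2PL) model: it picks the unused item with the most Fisher information at the current ability estimate, then updates the estimate with an expected a posteriori (EAP) step under a standard normal prior. The item bank, its parameter values and all function names are hypothetical. This is not Concerto's API (Concerto tests are written in R), and it is not how any particular ICAR test is scored.

```python
from __future__ import annotations

import math


def p_correct(theta: float, a: float, b: float) -> float:
    """2PL probability of a correct response at ability theta."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta: float, a: float, b: float) -> float:
    """Fisher information of a 2PL item at theta: a^2 * P * (1 - P)."""
    p = p_correct(theta, a, b)
    return a * a * p * (1.0 - p)


def select_next_item(theta: float, bank: dict[str, tuple[float, float]],
                     administered: set[str]) -> str:
    """Return the unused item with maximum information at the current estimate."""
    candidates = [name for name in bank if name not in administered]
    if not candidates:
        raise ValueError("item bank exhausted")
    return max(candidates, key=lambda name: item_information(theta, *bank[name]))


def eap_estimate(responses: dict[str, int], bank: dict[str, tuple[float, float]],
                 grid_points: int = 81) -> float:
    """EAP ability estimate on a grid over [-4, 4] with a N(0, 1) prior."""
    grid = [-4.0 + 8.0 * i / (grid_points - 1) for i in range(grid_points)]
    weights = []
    for theta in grid:
        w = math.exp(-0.5 * theta * theta)   # unnormalised standard normal prior
        for name, u in responses.items():    # u = 1 correct, 0 incorrect
            p = p_correct(theta, *bank[name])
            w *= p if u == 1 else 1.0 - p
        weights.append(w)
    total = sum(weights)
    return sum(t * w for t, w in zip(grid, weights)) / total


if __name__ == "__main__":
    # Hypothetical bank: item name -> (discrimination a, difficulty b)
    bank = {"easy": (1.0, -1.5), "mid": (1.2, 0.0), "hard": (1.0, 1.5)}
    responses: dict[str, int] = {}
    theta = 0.0
    for simulated_answer in (1, 0):  # pretend: first answer correct, second wrong
        item = select_next_item(theta, bank, set(responses))
        responses[item] = simulated_answer
        theta = eap_estimate(responses, bank)
        print(f"administered {item!r}, answer={simulated_answer}, theta={theta:.2f}")
```

Maximum-information selection is only one of several item-selection rules used in adaptive testing. Production systems typically also add item-exposure control and a stopping rule (for example, a target standard error), both of which this sketch omits.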

About us

Core teams

- Prof. William Revelle and David Condon from Northwestern University, United States

- Dr. Philipp Doebler, Prof. Heinz Holling and Ehsan Masoudi from the University of Münster, Germany

- Prof. John Rust, Dr. Michal Kosinski, Dr. David Stillwell, Dr. Luning Sun, Fiona Chan and Aiden Loe from the University of Cambridge, United Kingdom

- Dr. Fang Luo and Prof. Hongyun Liu from Beijing Normal University, China

- Dr. Haniza Yon from MIMOS National R&D Centre in ICT, Malaysia

- Dr. Yoram Bachrach, Dr. Pushmeet Kohli and Dr. Thore Graepel from Microsoft Research Cambridge, United Kingdom

- Dr. Ricardo Primi from the University of São Francisco, Brazil

- Dr. Jan Stochl from the University of York, United Kingdom

- Dr. Andrew Bateman from the Oliver Zangwill Centre for Neuropsychological Rehabilitation, United Kingdom

- and others

- If you have thoughts, ideas or hypotheses about how existing items could be improved, we encourage you to test them and share the results with the wider community.

- If you have items that you would like to contribute, please contact us so that we can share this information with other researchers (your authorship would, of course, be maintained). For the foreseeable future, we do not intend to set formal procedures for including new item types, except that new items must have been validated in some generalizable context.